Simulation and Coordination of Mobile Robots

- Object Reconstruction Using Large Quantities of Cube Robots (Honours project of Sehwan Lee)

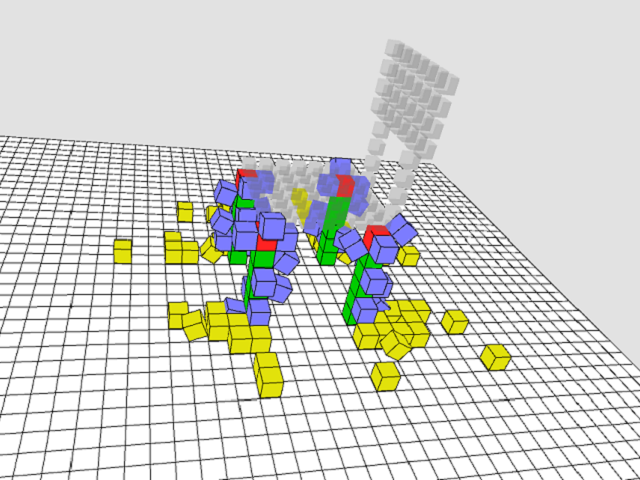

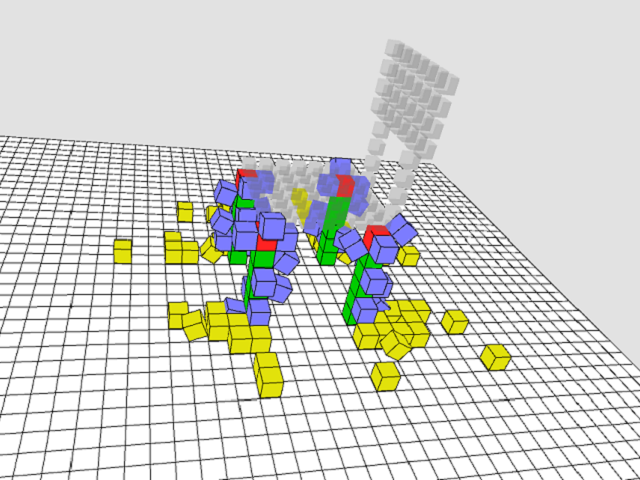

Consider a scenario in which we place a 3D environment scanner in an arbitrary room containing various everyday objects (e.g., furniture) and produce a 3D model of what is in the room. Now suppose we release thousands of small cube-shaped robots into an empty room with the same proportions as the scanned room. We are investigating algorithms by which the robots autonomously rebuild a physical 3D representation of the scanned model. The idea is similar to having the robots automatically coordinate and merge together to build a physical Lego-like (or Minecraft-like) structural representation of the actual room and its furniture. The robots need to be designed so that they can flop around as well as climb one another, and attach themselves together in a manner that is structurally sound. We are currently working on simulations of such robots.
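Whatever coordination strategy is used, a simulation of this kind needs an order in which cubes can be placed so that each new cube has something to attach to. The following is a minimal sketch, assuming the scanned model has already been voxelized into occupied integer cells `(x, y, z)` with `z = 0` on the floor, and assuming a cube may attach to any face of an already placed cube. `build_order` is a hypothetical name, and this breadth-first ordering is only an illustration, not the project's algorithm.

```python
from collections import deque

Cell = tuple[int, int, int]  # (x, y, z) voxel; z = 0 is the floor (assumption)

# The six face-adjacent neighbour offsets of a cube.
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def build_order(voxels: set[Cell]) -> tuple[list[Cell], set[Cell]]:
    """Return (placement order, unreachable cells).

    Breadth-first search starting from the floor cells: a cell is queued
    only once a face-adjacent neighbour has been placed, so every new cube
    touches the structure that already exists.
    """
    order: list[Cell] = []
    placed: set[Cell] = set()
    queue = deque(sorted(c for c in voxels if c[2] == 0))
    placed.update(queue)
    while queue:
        cell = queue.popleft()
        order.append(cell)
        x, y, z = cell
        for dx, dy, dz in NEIGHBOURS:
            nxt = (x + dx, y + dy, z + dz)
            if nxt in voxels and nxt not in placed:
                placed.add(nxt)
                queue.append(nxt)
    return order, voxels - placed
```

Cells returned as unreachable, such as a floating voxel left over from scanning noise, cannot be built from the floor up under this attachment assumption.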

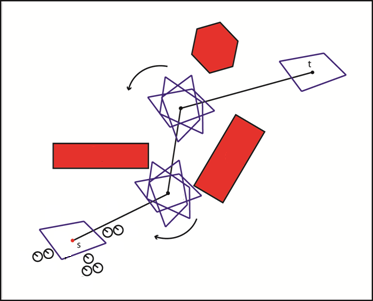

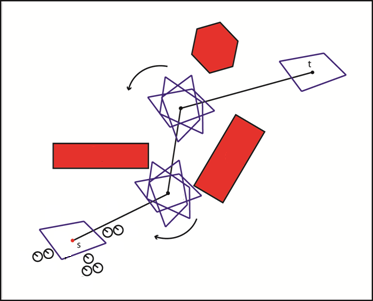

- Coordinated Object Pushing by a Team of Robots (Master's Thesis of Pierre Chamoun)

Mobile

robots

can

be

used

to

solve various real-world problems without human

supervision. Teams of robots are sometimes required because

the a single robot may not have enough power or agility to manipulate

large heavy objects such as pushing heavy items in a factory,

transporting dangerous goods, or pushing a boat into a

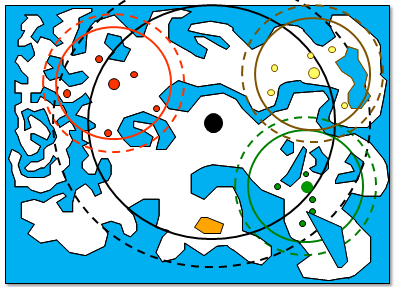

port. We have been researching algorithms to coordinate a

team of autonomous mobile robots to solve the task of pushing an

arbitrary 2D polygonal-shaped fixed-weight object from one location to

another in a 2D environment with various polygonal objects.

The problem requires the robots to place themselves around the

push-object efficiently so as to be able to transport the object

smoothly and as quick as possible along a computed path

trajectory. Depending on the number and placement of the

obstacles, the problem usually requires the push-object to be rotated

at various times during the transportation (much like having to adjust

the orientation of a couch being moved into an apartment).

The robots must have a strategy for re-positioning themselves

efficiently around the push-object as well as having a strategy to

coordinate the efforts of all the robots so that the push-object is

always moving in the correct direction.
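Keeping the push-object moving in the correct direction comes down to checking what the robots' combined pushes do to it: the summed force translates it, and the summed torque about its center of mass rotates it (which is exactly what turning the couch requires). The following is a minimal sketch, assuming quasi-static planar pushing in which each robot applies a known force at a known contact point and friction is ignored. `net_wrench` and `heading_error` are hypothetical helpers, not the thesis's actual method.

```python
import math

Vec = tuple[float, float]

def net_wrench(contacts: list[tuple[Vec, Vec]], centroid: Vec) -> tuple[Vec, float]:
    """Sum the pushes of all robots on the object.

    Each contact is (point, force). Returns the net force vector and the
    net torque about the centroid (counter-clockwise positive), using the
    2D cross product r x F = rx * Fy - ry * Fx.
    """
    fx = fy = torque = 0.0
    cx, cy = centroid
    for (px, py), (f_x, f_y) in contacts:
        fx += f_x
        fy += f_y
        rx, ry = px - cx, py - cy
        torque += rx * f_y - ry * f_x
    return (fx, fy), torque

def heading_error(force: Vec, desired: Vec) -> float:
    """Signed angle (radians) from the desired travel direction to the net push."""
    a = math.atan2(force[1], force[0]) - math.atan2(desired[1], desired[0])
    return math.atan2(math.sin(a), math.cos(a))  # wrap into [-pi, pi]
```

Two robots pushing a square from the left at equal distances above and below its center produce zero torque and pure translation; removing one of them leaves a torque that rotates the object, which a coordination strategy would have to either exploit or cancel.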

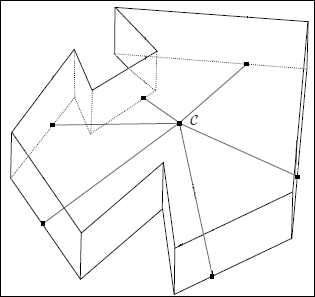

- Weight Distribution for a Team of Lifting Robots (Master's Thesis of Mike Doherty)

When

teams

of

robots

are

assigned

to lift a large heavy object (e.g., for

transportation purposes), it is important that the weight is

distributed among the robots. Proper weight distribution

will help to minimize the number of robots required to lift the object

while ensuring that the robots are less likely to be overburdened,

which could lead to hardware failure as well as increase the likelihood

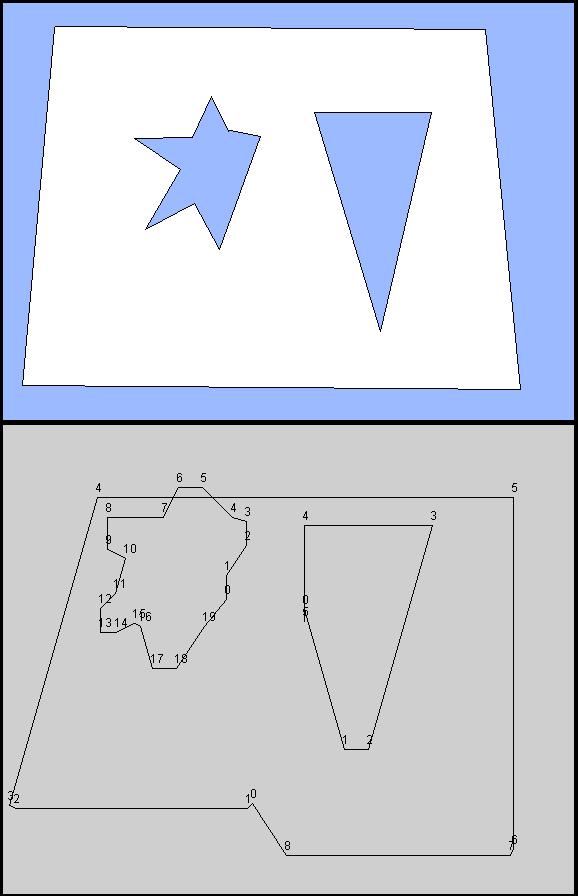

of dropping the object being transported. We have been

investigating algorithms for distributing the weight of a 2D polygonal

uniformly-weighted object by determining efficient placement of a team

of robots based on the center of gravity of the object.
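The center of gravity of a uniformly weighted polygon is its area centroid, which the shoelace formula gives directly. For the simplest case of three lifting robots, static equilibrium (vertical forces summing to the weight, zero net moment) fixes each robot's share: it equals the weight times the centroid's barycentric coordinate in the triangle formed by the robots, and every share is non-negative only when the centroid lies inside that triangle. The following is a minimal sketch under those assumptions (rigid object, purely vertical lifting forces, exactly three robots). The function names are hypothetical, and this is not necessarily how the thesis places its robots.

```python
Point = tuple[float, float]

def polygon_centroid(poly: list[Point]) -> Point:
    """Area centroid of a simple polygon (vertices in order), via the shoelace formula."""
    a = cx = cy = 0.0
    n = len(poly)
    for i in range(n):
        x0, y0 = poly[i]
        x1, y1 = poly[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        a += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    a *= 0.5  # signed area
    return (cx / (6.0 * a), cy / (6.0 * a))

def load_shares(weight: float, g: Point, robots: list[Point]) -> list[float]:
    """Vertical force each of three robots must supply to hold the object level.

    Solves sum(F_i) = weight and sum(F_i * p_i) = weight * g; the solution is
    weight times the barycentric coordinates of g in the robots' triangle.
    A negative share means g lies outside the triangle (infeasible placement).
    """
    (x1, y1), (x2, y2), (x3, y3) = robots
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    l1 = ((y2 - y3) * (g[0] - x3) + (x3 - x2) * (g[1] - y3)) / det
    l2 = ((y3 - y1) * (g[0] - x3) + (x1 - x3) * (g[1] - y3)) / det
    return [weight * l1, weight * l2, weight * (1.0 - l1 - l2)]
```

A placement that makes the three shares equal spreads the load evenly; with more than three robots the equilibrium equations no longer determine the shares uniquely, so an additional criterion (such as minimizing the largest share) would be needed.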

- Hierarchical Robot Coordination

Individual mobile robots are limited in their ability to accomplish tasks that require coverage of large areas. For example, if a single robot had to search for a lost person or device in a terrain, the search would be difficult and time-consuming. However, if a large team of robots were used for the search, the lost person or device would be found much more quickly, provided that the robots coordinate their search efforts. We researched ways of organizing simple robots (i.e., robots with very few sensors) into hierarchical arrangements in which, at the lowest level, small teams of robots are managed by a team coordinator. These coordinators are themselves managed by a higher-level coordinator, and so on. At the top level, a human can coordinate the highest-level coordinating robots. This allows global knowledge to trickle down through the hierarchy so that the individual robots can be directed to various areas of the environment more efficiently and in a timely manner. Communication is simplified because it occurs only between each robot and its coordinator, which directs its efforts. Therefore, if a communication failure occurs within the hierarchy, the lower-level coordinators can still function using their latest search directive.
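The hierarchy can be sketched as a tree in which each coordinator passes a share of its own search area down to its subordinates and every node remembers the last directive it received. The following is a minimal sketch, assuming rectangular search areas that each coordinator splits into equal vertical strips, and a simple `online` flag that simulates a broken link. The `Node` class and the strip-splitting rule are illustrative assumptions, not the project's actual protocol.

```python
from dataclasses import dataclass, field
from typing import Optional

# Search area as (x_min, y_min, x_max, y_max); an illustrative assumption.
Rect = tuple[float, float, float, float]

@dataclass
class Node:
    name: str
    children: list["Node"] = field(default_factory=list)
    directive: Optional[Rect] = None  # latest search area received from above
    online: bool = True  # False simulates a broken link to this node

    def receive(self, area: Rect) -> None:
        """Store the directive and split it into equal strips for reachable subordinates."""
        self.directive = area
        if not self.children:
            return  # a leaf robot searches its own strip
        x0, y0, x1, y1 = area
        width = (x1 - x0) / len(self.children)
        for i, child in enumerate(self.children):
            if child.online:  # unreachable nodes keep their previous directive
                child.receive((x0 + i * width, y0, x0 + (i + 1) * width, y1))

    def leaves(self) -> list["Node"]:
        """Individual robots at the bottom of this subtree."""
        if not self.children:
            return [self]
        return [leaf for child in self.children for leaf in child.leaves()]
```

Calling `receive` on the root plays the role of the human at the top level. If a coordinator goes offline, it and its team simply keep searching their last assigned strip, which mirrors the fault tolerance described above; the strip that coordinator would have received stays unassigned until the link recovers.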

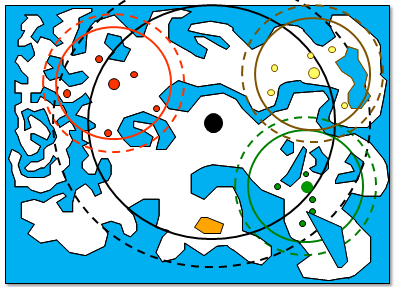

- Simulation of Robot Mapping in an Unknown Environment (Honours project of Sophia Ho)

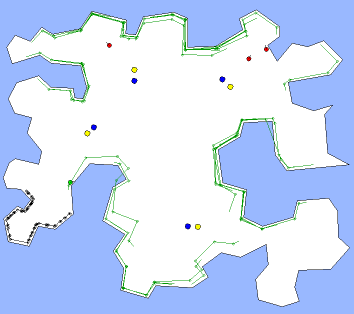

In this project, we developed an algorithm that allows a robot with simple sensors (e.g., whiskers and a compass) to create a map of an unknown 2D environment with obstacles. The robot is able to trace out the border of the environment, as well as the border of each individual obstacle, to produce polygonal representations of the environment. Since information is obtained only while travelling along obstacle borders, no information is available while the robot is travelling through the open spaces of the environment. Hence, "theoretical bridge" connections are introduced between the various polygons to obtain the relative positioning between objects.
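With only whiskers and a compass, the polygon for a traced border can be assembled by dead reckoning: each time the compass heading changes while the robot follows a wall, its current position becomes a new vertex. The following is a minimal sketch, assuming the distance travelled along each straight stretch is known (for example from a constant speed and elapsed time, which the description above does not specify) and that headings are measured counter-clockwise from the x-axis; a real compass reports bearings clockwise from north, so its readings would need converting. `trace_polygon` is a hypothetical name.

```python
import math

Point = tuple[float, float]

def trace_polygon(segments: list[tuple[float, float]],
                  start: Point = (0.0, 0.0)) -> list[Point]:
    """Turn a wall-following log into polygon vertices by dead reckoning.

    segments: (heading in degrees, distance travelled) for each straight
    stretch of border; headings are counter-clockwise from the x-axis
    (assumption). A vertex is recorded at the end of every stretch.
    """
    x, y = start
    vertices = [start]
    for heading, dist in segments:
        x += dist * math.cos(math.radians(heading))
        y += dist * math.sin(math.radians(heading))
        vertices.append((x, y))
    # A closed border ends back at the start; drop the duplicate vertex.
    if len(vertices) > 1 and math.dist(vertices[-1], start) < 1e-6:
        vertices.pop()
    return vertices
```

Each traced obstacle yields its own polygon in the robot's dead-reckoned frame; the "theoretical bridge" connections are what relate those separate polygons to one another.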

- Robot Colony Simulation (Honours project of Jason Brink)

This project simulated a colony of robots that were able to interact to perform various tasks. One task that was implemented was coordinated mapping: each robot produced a portion of the environment map, and these partial maps were then merged to produce a global map.
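The merging step can be sketched with occupancy grids. The following is a minimal sketch, assuming each robot's partial map is a grid of cells coded as unexplored (-1), free (0) or obstacle (1), and that the offset of each partial map within the global frame is already known; estimating those offsets is usually the hard part in practice and is not shown. `merge_maps` and the cell codes are hypothetical.

```python
UNKNOWN, FREE, OBSTACLE = -1, 0, 1  # illustrative cell codes (assumption)

Grid = list[list[int]]

def merge_maps(width: int, height: int,
               parts: list[tuple[int, int, Grid]]) -> Grid:
    """Merge local grids placed at (row_offset, col_offset) into one global grid."""
    merged = [[UNKNOWN] * width for _ in range(height)]
    for r0, c0, grid in parts:
        for r, row in enumerate(grid):
            for c, cell in enumerate(row):
                gr, gc = r0 + r, c0 + c
                # The codes are ordered UNKNOWN < FREE < OBSTACLE, so max()
                # lets any observation replace "unexplored" and lets an
                # obstacle seen by any robot win over free space.
                merged[gr][gc] = max(merged[gr][gc], cell)
    return merged
```

Letting obstacles win is a conservative choice for a shared map; a colony that expects moving objects might instead prefer the most recent observation.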

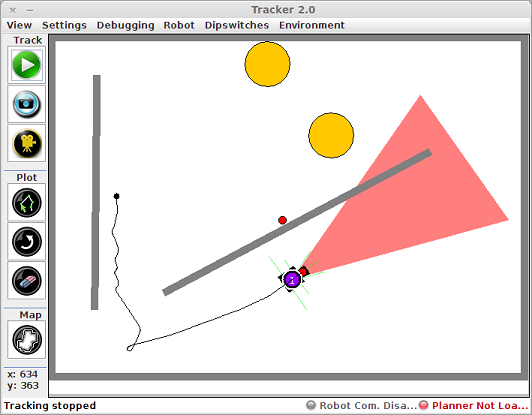

- PropBot Simulator (Honours project of Darryl Hill)

A 4th-year robotics course here at Carleton made use of a limited number of PropBots (i.e., robots designed for the course), which were used within our robotics lab test environment. To allow robotic algorithms to be tested outside of the lab setting, this software simulator was implemented so that students could test their software on simulated robots at home before coming in to the lab. The simulated robots were developed to closely correspond to the actual PropBot robots with regard to movements and sensors.

- Robot Colony Simulator (graduate course project)

This simulation tool was developed to simulate colonies of robots that use very simple instinctive neuron networks, like those in the robot from my master's thesis. The tool allows a user to create different kinds of cleaning robots that clean near well-lit areas, near other robots, or near both. I created colonies with different behaviours by varying the types of robots, such as swarm leaders, followers and light-directed cleaners. By observing the behaviour of these robots, I was able to determine which types of robots performed well together. A more important result is that the robots are able to perform well together even with minimal sensor complexity.
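The description does not spell out how an instinct network is built, but a common minimal form is a threshold neuron that wires a sensor directly to a motor, in the spirit of Braitenberg vehicles. The following is a minimal sketch, assuming a two-wheeled robot with left and right light sensors and crossed excitatory connections, so that it turns toward light as a light-directed cleaner might. The weights, threshold, base speed and function names are illustrative assumptions, not the tool's actual neuron model.

```python
def neuron(inputs: list[float], weights: list[float], threshold: float) -> float:
    """Threshold unit: outputs 1.0 when the weighted input sum reaches the threshold."""
    total = sum(i * w for i, w in zip(inputs, weights))
    return 1.0 if total >= threshold else 0.0

def light_seeking_step(left_light: float, right_light: float,
                       base_speed: float = 0.2) -> tuple[float, float]:
    """Return (left_wheel, right_wheel) speeds for one control step.

    Each light sensor excites the wheel on the opposite side, so bright
    light on the left speeds up the right wheel and the robot turns left,
    toward the light.
    """
    left_wheel = base_speed + neuron([right_light], [1.0], 0.5)
    right_wheel = base_speed + neuron([left_light], [1.0], 0.5)
    return left_wheel, right_wheel
```

Swapping to uncrossed connections would make the same robot turn away from light, which illustrates how small wiring changes in an instinct produce different colony roles.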

The robots have very simple sensors, such as sensors that detect light and signals from other robots. The tool allows the user to investigate different kinds of swarm behaviour and see how they apply to simple tasks such as cleaning an environment. The program has a convenient interface that allows the user to add sensors and actuators to the robots and code their behaviour at run-time. In addition, users can design instincts as networks of neurons by using a graphical drag-and-drop interface. The system allows the user to make libraries of robots, sensors, actuators and instincts. The screen snapshots below show the dialog boxes for creating robots, sensors and instincts:

- Motivation System (graduate course project)

This project investigated the various mechanisms that motivate behaviours in artificial life (A.L.). The system simulates a colony of bugs whose main goal is to remain alive. The bugs have attract/repel behaviours toward various kinds of stimuli (such as different foods, bugs of the same or different sex, light sources and gravel patches). The bugs are able to perform multi-level associative learning in their behaviour hierarchy, which allows them to stay alive.
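One simple way associative learning can bias attract/repel behaviour is sketched below. Each stimulus feature (e.g. "apple", "gravel", "light") carries an association weight, a situation's appeal is the sum of the weights of its features, and an outcome (a reward, or a punishment such as poisoned food or a zap) moves the weights of all present features toward that outcome using a delta-rule (Rescorla-Wagner-style) update. The feature names, learning rate and the delta rule itself are assumptions for illustration; the project's multi-level behaviour hierarchy is not reproduced here.

```python
from collections import defaultdict

class Associations:
    """Learned valence of stimulus features: positive attracts, negative repels."""

    def __init__(self, rate: float = 0.2) -> None:
        self.rate = rate  # learning rate (illustrative value)
        self.weight: dict[str, float] = defaultdict(float)

    def appeal(self, features: set[str]) -> float:
        """Predicted outcome of approaching a situation with these features."""
        return sum(self.weight[f] for f in features)

    def learn(self, features: set[str], outcome: float) -> None:
        """Delta rule: shift every present feature so the prediction moves toward the outcome."""
        error = outcome - self.appeal(features)
        for f in features:
            self.weight[f] += self.rate * error

    def attracted(self, features: set[str]) -> bool:
        return self.appeal(features) >= 0.0
```

For example, zapping a bug whenever it eats an apple on gravel near light, while letting it eat apples elsewhere, drives the "apple" weight positive and the "gravel" and "light" weights negative, so the bug learns to avoid only that combination.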

The user can add multiple bugs to the environment as well as various types of stimuli. The user can also poison certain foods and/or zap bugs when they are doing something considered "bad". For example, the user can stop male bugs from chasing female bugs, or stop a bug from eating apples that are on gravel patches near light sources. Some very interesting behaviours emerge from varying the stimuli and punishment patterns. As a bonus feature, there is a brain browser (see image below) that allows the user to examine the brain of a bug to view the hierarchical behaviour mechanisms that led it to behave in a certain manner, as well as to observe the associative learning that has taken place.

- Neural Network-Driven Robot Insect (graduate course project)

This project involved implementing an insect that learns how to coordinate its legs to achieve walking by using backpropagation neural networks. Each leg has its own network that indicates whether the leg should be in stance mode (down and pushing backward) or swing mode (up and swinging forward). The network of each leg connects to those of the adjacent legs across from and behind it. When the insect falls down (i.e., not enough legs are in stance mode or the center of gravity is off balance), the leg networks are "punished". The leg networks are all rewarded when the insect makes forward motion. When created, the insects are endowed with a certain amount of pre-learnt instinct. After about 20 seconds (the time varies depending on the amount of instinctive learning), the insect is able to coordinate its legs and walk. The user can then pluck off some of the legs and watch as the insect attempts to compensate for the lost limbs. As an additional feature, the insect has two backpropagation networks linked to light sensors, and it is able to quickly learn how to seek out light sources.
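The "falls down" condition above can be checked geometrically. A statically stable walker needs at least three feet in stance, and its center of gravity, projected onto the ground, must lie inside the convex support polygon formed by those feet. The following is a minimal sketch, assuming foot positions and the center of gravity are given as 2D ground-plane coordinates. It uses Andrew's monotone-chain convex hull; `has_fallen` is a hypothetical name, and the project's actual test may differ.

```python
Point = tuple[float, float]

def cross(o: Point, a: Point, b: Point) -> float:
    """z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points: list[Point]) -> list[Point]:
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: list[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: list[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def has_fallen(stance_feet: list[Point], cog: Point) -> bool:
    """True if fewer than three legs are in stance or the CoG is outside the support polygon."""
    if len(stance_feet) < 3:
        return True
    hull = convex_hull(stance_feet)
    if len(hull) < 3:
        return True  # collinear feet enclose no support area
    n = len(hull)
    # Inside a counter-clockwise convex polygon means left of (or on) every edge.
    return any(cross(hull[i], hull[(i + 1) % n], cog) < 0 for i in range(n))
```

A check like this could supply the punishment signal for the leg networks at each simulation step, while forward displacement of the body supplies the reward.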