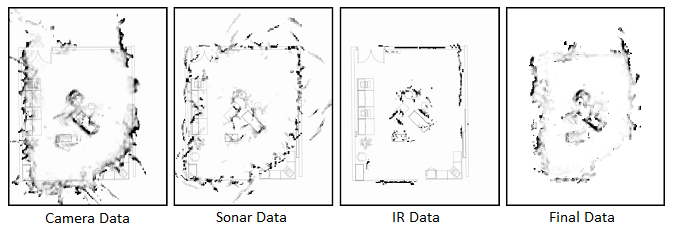

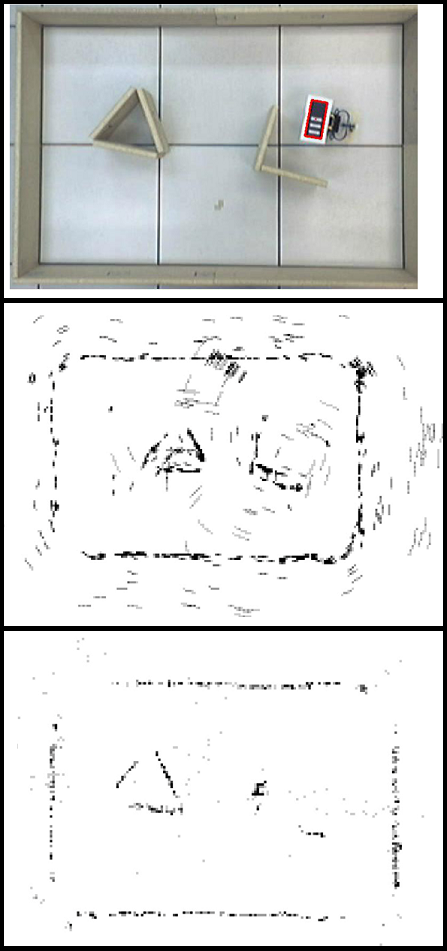

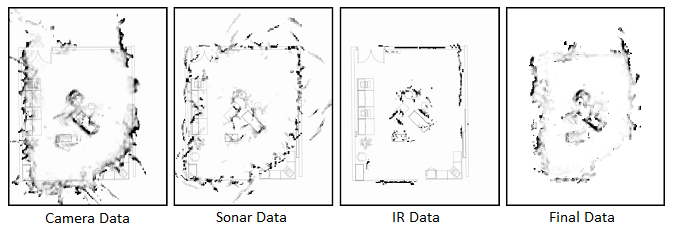

Vision-based sensors, such as stereo cameras, are often used on mobile robots for mapping and navigation. Cameras provide a rich set of data, making them useful for object recognition, localization and detecting environmental structure. When obtaining range measurements, however, stereo camera vision systems do not perform well under some environmental conditions, such as featureless regions (e.g., blank walls), large metallic or glass surfaces (e.g., windows) and low-light scenes. We investigated improving the range data obtained from a stereo camera vision system by fusing it with additional sonar and infrared proximity sensor data. Our results showed that, through sensor fusion, we were able to discard 49% of the camera data as noise and improve our perimeter mapping by filling in 12% of the perimeter that was missing from the camera data alone.

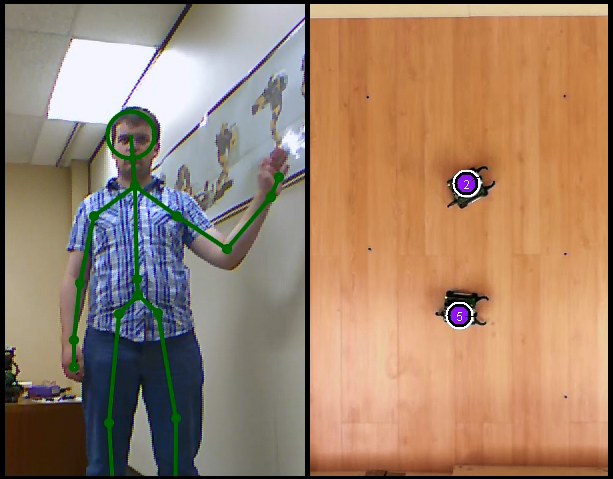

- Controlling Propbots With Microsoft Kinect (Honours project of Wes Lawrence)

In this project, we investigated the idea of controlling a group of robots through simple gestures and voice commands. Such commands can suggest to robots how to act, or instruct them to perform particular tasks. For example, point to a location and the robot goes there, or speak a single robot's “name” and only that robot pays attention to you. In our implementation, a Microsoft Kinect device was used to monitor the gestures and voice commands of a human operator. Certain gestures were recognized as commands to be sent to a group of robots to coordinate their movements and tasks. This project was done as a "proof-of-concept" in which just a couple of robots were used (i.e., PropBots built by Mark Lanthier) and just a few gestures were incorporated.

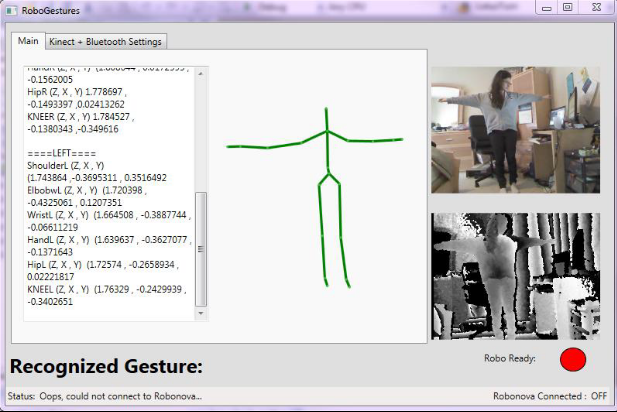

- Wireless Robotic Imitation of Human Movements Using a Kinect (Honours project of Joyce Tannouri)

The main goal of this project was to get a Microsoft Kinect sensor to recognize user gestures, movements, and poses and then have a ROBONOVA-I mimic them wirelessly in real time over Bluetooth. Given the ROBONOVA-I's limitations, the Kinect's skeletal tracking and depth capabilities made it possible to program a wide range of recognizable gestures that the robot can perform. In the end, the robot was able to combine different gestures and positions while maintaining balance and stability, for a total of 50 movements.

- Scanning Objects in 3D With Kinect (Honours project of Mohammed Khoory)

Robots often need to scan 3D environments to recognize landmarks and create 3D representations of what they see around them. In this project, we investigated the idea of scanning real-world objects with a Microsoft Kinect device and representing them as geometric voxel models. Different methods to help reduce the noise in the data were explored. By controlling the resolution of the voxels, the program was able to control the complexity and level of detail in the resulting model.
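
The link between voxel resolution and level of detail can be illustrated with a minimal sketch. This is not the project's code: the function name `voxelize`, the `min_points` noise threshold, and the assumption that the scan is already available as a list of `(x, y, z)` points in metres are all hypothetical choices made for illustration.

```python
import math
from collections import Counter

def voxelize(points, voxel_size, min_points=1):
    """Map 3D points to the set of occupied voxel indices.

    points      -- iterable of (x, y, z) coordinates (assumed to be in metres)
    voxel_size  -- edge length of one cubic voxel; smaller means more detail
    min_points  -- hypothetical noise filter: voxels hit by fewer points are dropped
    """
    counts = Counter(
        (math.floor(x / voxel_size),
         math.floor(y / voxel_size),
         math.floor(z / voxel_size))
        for x, y, z in points
    )
    # Keep only voxels supported by enough samples
    return {voxel for voxel, n in counts.items() if n >= min_points}

# Illustrative data only: three nearby samples, one neighbour, one far point
scan = [(0.01, 0.01, 0.01), (0.02, 0.01, 0.01), (0.03, 0.02, 0.01),
        (0.26, 0.01, 0.01), (0.91, 0.91, 0.91)]
fine = voxelize(scan, 0.05)      # small voxels: more cells, more detail
coarse = voxelize(scan, 0.5)     # large voxels: fewer cells, simpler model
print(len(fine), len(coarse))
```

Raising `voxel_size` merges nearby points into the same cell, which shrinks the model, while raising `min_points` is one simple way to suppress isolated noisy samples.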

- 3D Mapping and Imaging (Honours project of William Wilson)

It is difficult to form accurate 3D models from simple 2D camera data. 3D laser scanners can produce more accurate 3D models in terms of shape and size, but such scanners lack texture and color information. In this project, we combined 2D camera image data with 3D laser scanner data to form 3D models of a scene.
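
One common way to combine the two data sources is to project each laser point into the camera image and copy the pixel color found there. The sketch below assumes a simple pinhole camera model and laser points already expressed in the camera's coordinate frame. The function name `colorize_points` and the intrinsic parameters `fx`, `fy`, `cx`, `cy` are illustrative assumptions, not details taken from the project.

```python
def colorize_points(points, image, fx, fy, cx, cy):
    """Attach an RGB color to every 3D point that projects inside the image.

    points -- iterable of (X, Y, Z) in the camera frame, Z pointing forward
    image  -- list of rows, each row a list of (r, g, b) tuples
    fx, fy -- focal lengths in pixels (assumed known from calibration)
    cx, cy -- principal point in pixels (assumed known from calibration)
    """
    height, width = len(image), len(image[0])
    colored = []
    for X, Y, Z in points:
        if Z <= 0:
            continue  # point lies behind the camera, so it cannot be seen
        # Pinhole projection onto the image plane
        u = int(round(fx * X / Z + cx))
        v = int(round(fy * Y / Z + cy))
        if 0 <= u < width and 0 <= v < height:
            colored.append(((X, Y, Z), image[v][u]))
    return colored
```

Points that fall outside the image or behind the camera are simply left out here. A fuller system would also need the laser-to-camera calibration and some handling of occlusions.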

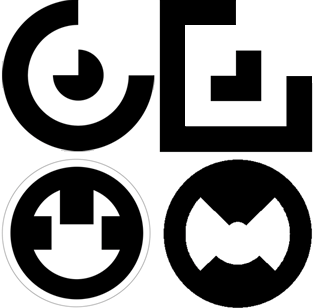

- Robot Tracker Improvements (Honours project of Sara Verkaik)

GPS systems give absolute positioning for outdoor vehicles, allowing drivers to know where they are within their environment. However, in a small indoor environment, it is difficult to obtain absolute positioning for robots because GPS systems do not work indoors and would not provide adequate accuracy for robotic task completion. In our lab, we developed an overhead camera system to track robots based on recognizing various colored tags. However, a need arose to distinguish more robots from one another, so a tag-based system had to be developed. This project investigated various types of tags and how well they were recognized by the overhead cameras.

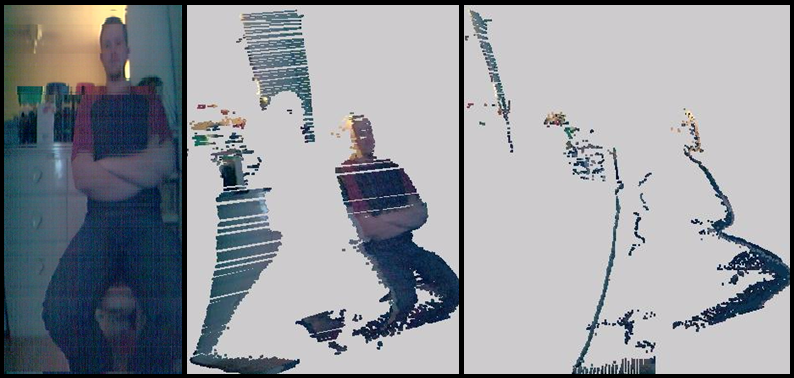

- 3D Scale Depth Analysis (Honours project of Philip Carswell)

The pictures produced by a stereo camera may have inaccurate depth data due to a lack of contrast. In this project, a "manual-fix" feature can be used to manually choose which pixels to fix, and at what depth. Because this process is impractical and time-consuming, two "automatic-fix" algorithms were created to fix the depth data. One searches for the closest correct depth value to replace the faulty one, while the other averages the depths surrounding the faulty pixel in an N by N area, where N is specified as input to the algorithm.
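
The two automatic-fix ideas can be sketched as follows. This is a minimal illustration, not the project's implementation: it assumes the depth map is a list of rows in which faulty pixels have already been marked as `None`, and the function names `fix_nearest` and `fix_average` are hypothetical.

```python
def fix_nearest(depth):
    """Replace each faulty pixel (None) with the closest valid depth value."""
    rows, cols = len(depth), len(depth[0])
    valid = [(r, c) for r in range(rows) for c in range(cols)
             if depth[r][c] is not None]
    fixed = [row[:] for row in depth]  # work on a copy
    if not valid:
        return fixed  # nothing to copy from
    for r in range(rows):
        for c in range(cols):
            if depth[r][c] is None:
                # Brute-force search for the nearest valid pixel
                nr, nc = min(valid,
                             key=lambda p: (p[0] - r) ** 2 + (p[1] - c) ** 2)
                fixed[r][c] = depth[nr][nc]
    return fixed

def fix_average(depth, n):
    """Replace each faulty pixel with the mean of valid depths in an n-by-n window."""
    rows, cols = len(depth), len(depth[0])
    half = n // 2  # n is assumed to be odd so the window is centred
    fixed = [row[:] for row in depth]
    for r in range(rows):
        for c in range(cols):
            if depth[r][c] is not None:
                continue
            window = [depth[i][j]
                      for i in range(max(0, r - half), min(rows, r + half + 1))
                      for j in range(max(0, c - half), min(cols, c + half + 1))
                      if depth[i][j] is not None]
            if window:  # leave the pixel unfixed if no valid neighbour exists
                fixed[r][c] = sum(window) / len(window)
    return fixed
```

The nearest-value approach keeps sharp depth edges but can copy a value from the wrong surface, whereas averaging smooths across surfaces and depends on the choice of window size.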

- Map Generation Ultrasonic and IR Sensory Data (Honours project of Jason Humber)

This project investigated the accuracy of maps that could be obtained by using a Sharp GP2D12 IR distance sensor and a MindSensors Ultrasonic Proximity Sensor mounted on a robot whose position was tracked by an overhead camera tracking system.

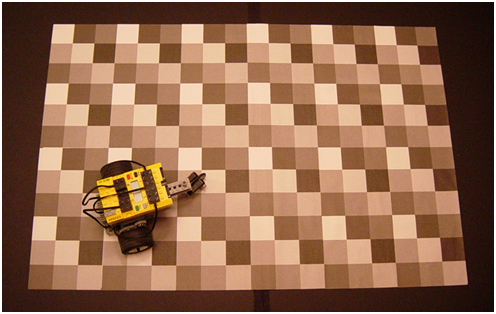

- Spatial Cognition Using a Specialized Grid (Honours project of Dwayne Moore)

In this project, we investigated the ability of a robot to keep track of its location within a grid environment using a simple light sensor mounted under the robot. By using various shades of gray arranged in a pattern, the robot was able to maintain a fairly accurate estimate of its position by recognizing when it had moved from one grid cell to another.
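
The project's actual shade pattern is not described here, so the sketch below uses one hypothetical pattern to show why such a scheme can work. With five shades assigned as `(x + 2*y) mod 5`, each of the four neighbouring cells differs from the current cell by a distinct amount, so a single change in the sensed shade reveals the direction of the move. The names `shade_at`, `STEP_FOR_DELTA` and `track` are illustrative, and the readings are assumed to be already classified into shade indices 0 to 4.

```python
NUM_SHADES = 5  # assumed number of distinguishable gray levels

def shade_at(x, y):
    """Hypothetical pattern: shade index of grid cell (x, y)."""
    return (x + 2 * y) % NUM_SHADES

# Change in shade index (mod 5) -> step taken on the grid
STEP_FOR_DELTA = {
    1: (1, 0),    # moved one cell in +x
    4: (-1, 0),   # moved one cell in -x
    2: (0, 1),    # moved one cell in +y
    3: (0, -1),   # moved one cell in -y
}

def track(start, readings):
    """Update a grid position from a sequence of classified shade readings."""
    x, y = start
    last = shade_at(x, y)
    for shade in readings:
        delta = (shade - last) % NUM_SHADES
        if delta == 0:
            continue  # same shade, so the robot is still in the same cell
        dx, dy = STEP_FOR_DELTA[delta]
        x, y = x + dx, y + dy
        last = shade
    return (x, y)
```

For example, `track((0, 0), [0, 1, 2, 4, 3])` follows the robot two cells in +x, one in +y and one in -x. A real sensor would also need a calibration step to turn raw light readings into shade indices, and misclassified readings would accumulate as position error.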